International Conference on Machine Learning (ICML), 2025

arXiv / Code

We present a new two-stage pipeline to identify many complex objects in real-world images without supervision.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025

arXiv / Code

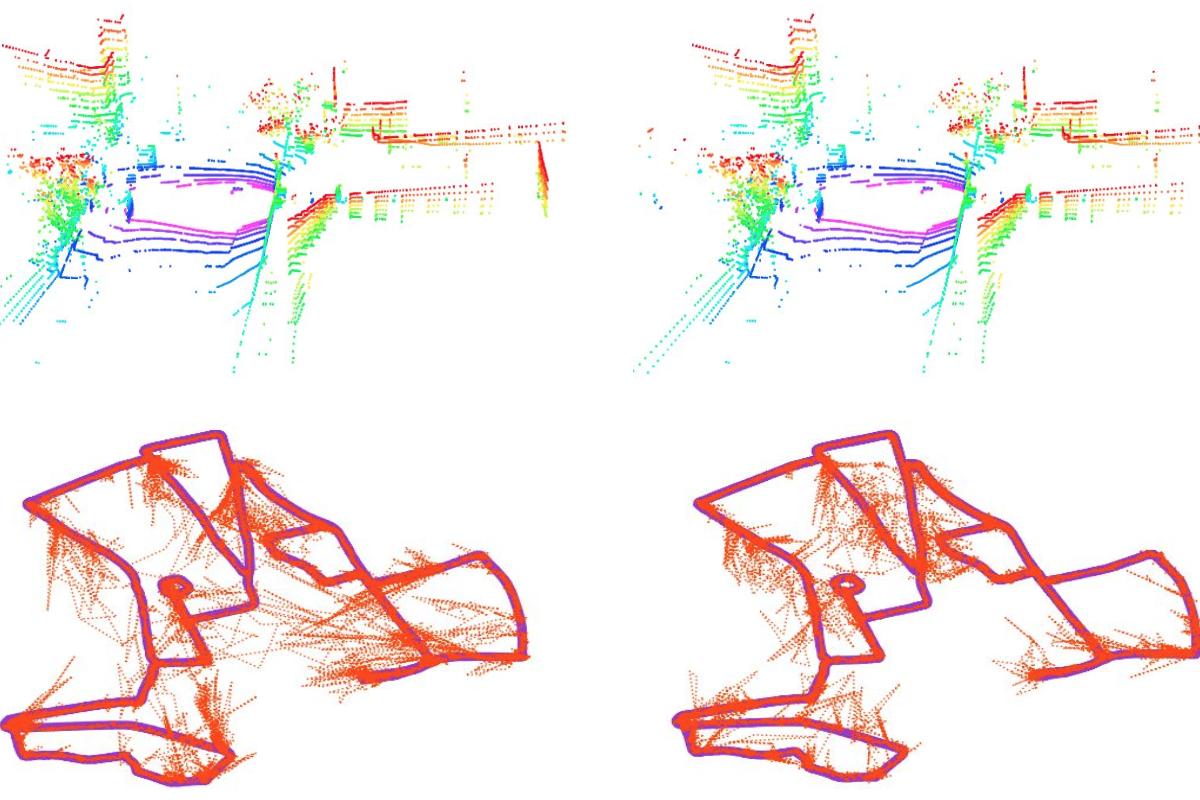

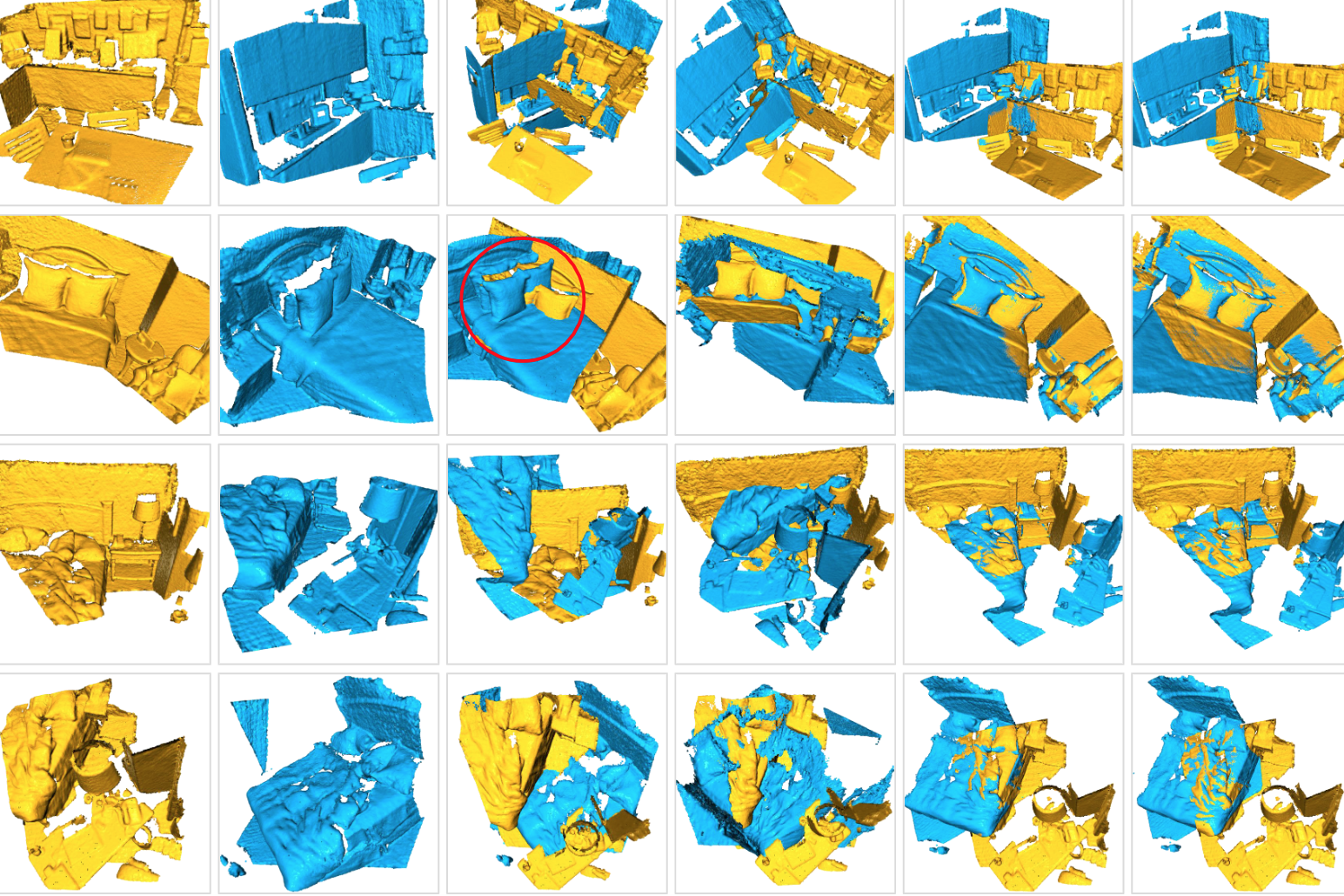

We present a new unsupervised learning method for 3D semantic segmentation, achieving SOTA performance.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2025

arXiv / Code

We present a new framework that learns 3D geometry, appearance and velocity purely from multi-view videos, achieving SOTA performance in future extrapolation.

International Conference on Learning Representations (ICLR), 2025 (Spotlight, 380/11672)

arXiv / Code

We present the first agent-based framework for unsupervised 3D object segmentation on point clouds.

International Conference on Machine Learning (ICML), 2024

arXiv / Code

We present the first framework to represent dynamic 3D scenes in infinitely many ways from a monocular RGB video.

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2024 (IF=20.8)

IEEE Xplore / Code

The journal version of our OGC at NeurIPS 2022. More experiments and analysis are included.

IEEE International Conference on Robotics and Automation (ICRA), 2024

Project Page

We present a novel framework to track and catch reactive objects in a dynamic 3D world.

International Journal of Computer Vision (IJCV), 2024 (IF=11.6)

arXiv / Springer Access / Code

The journal version of our NeurIPS 2022 paper. A complete benchmark and analysis are included.

Advances in Neural Information Processing Systems (NeurIPS), 2023

arXiv / Code

We present a novel framework to simultaneously learn the geometry, appearance, and physical velocity of 3D scenes.

Advances in Neural Information Processing Systems (NeurIPS), 2023

arXiv / Project Page / Code

(* indicates corresponding author)

We propose a novel ray-based 3D shape representation, achieving 1000x faster rendering.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023

arXiv / Code

(* indicates corresponding author)

We propose the first unsupervised 3D semantic segmentation method, learning from growing superpoints in point clouds.

International Conference on Learning Representations (ICLR), 2023

arXiv / Tweet / Code

(* indicates corresponding author)

We introduce a single pipeline to simultaneously reconstruct, decompose, manipulate and render complex 3D scenes.

IEEE International Conference on Robotics and Automation (ICRA), 2023

Project Page

We propose a generalizable framework for robotic manipulation.

Advances in Neural Information Processing Systems (NeurIPS), 2022

arXiv / Video / Code

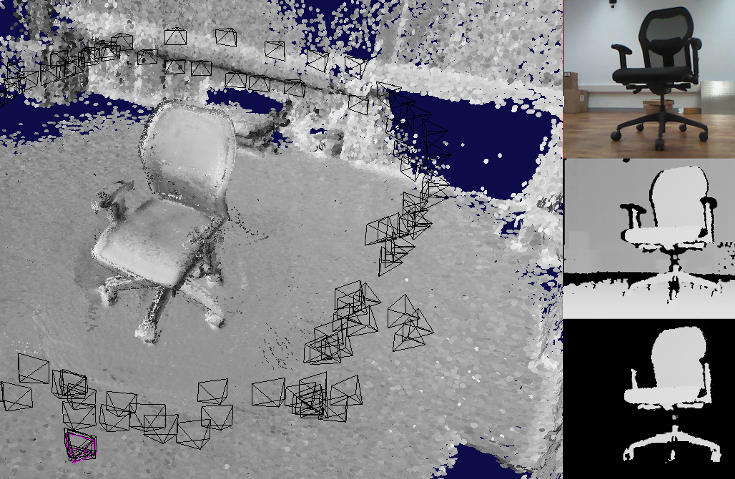

We introduce the first unsupervised 3D object segmentation method on point clouds.

Advances in Neural Information Processing Systems (NeurIPS), 2022

arXiv / Project Page / Code

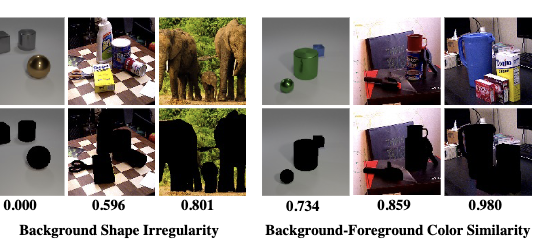

We systematically investigate the effectiveness of existing unsupervised models on challenging real-world images.

European Conference on Computer Vision (ECCV), 2022

arXiv / Code

(* indicates corresponding author)

We introduce a simple weakly supervised neural network to learn precise 3D semantics for large-scale point clouds.

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2022 (IF=16.39)

IEEE Xplore / Code

The journal version of our SpinNet. More experiments and analysis are included.

arXiv / Demo / Project Page

(* indicates corresponding author)

We propose a new method to recover the geometry and semantics of continuous 3D scene surfaces from point clouds.

International Journal of Computer Vision (IJCV), 2022 (IF=7.41)

arXiv / Springer Access / Demo / Project Page

(* indicates corresponding author)

The journal version of our SensatUrban. More experiments and analysis are included.

IEEE Sensors Journal, 2022 (IF=3.30)

arXiv / IEEE Xplore

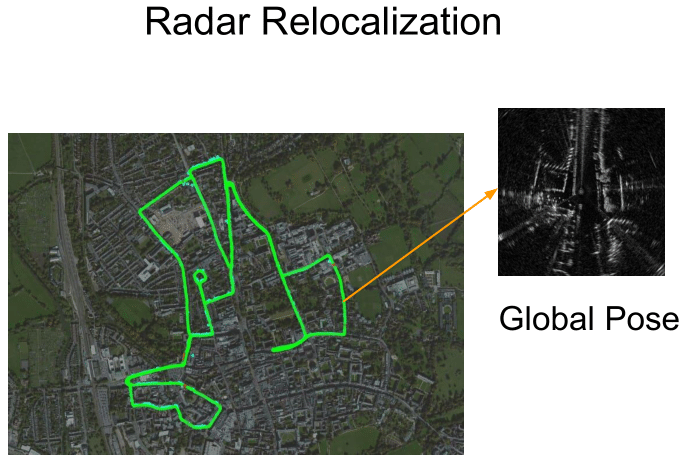

We present a learning-based LiDAR relocalization framework to efficiently estimate 6-DoF poses from LiDAR point clouds.

IEEE International Conference on Computer Vision (ICCV), 2021

arXiv / News: CVer / Code

We introduce a simple implicit neural function to represent complex 3D geometries purely from 2D images.

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2021 (IF=16.39)

arXiv / IEEE Xplore / Code

(* indicates corresponding author)

The journal version of our RandLA-Net. More experiments and analysis are included.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021

arXiv / Code

(^ indicates equal contribution)

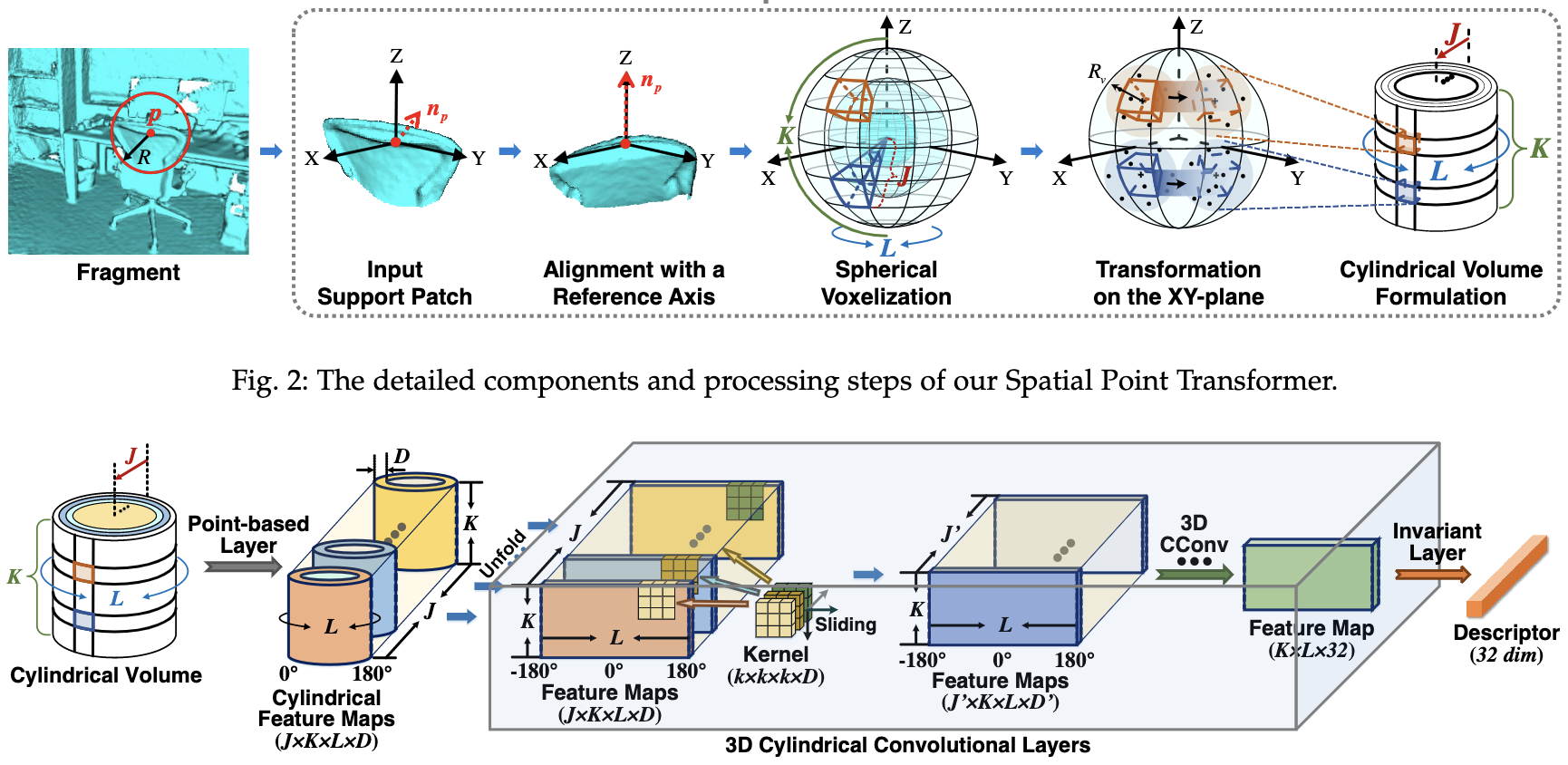

We introduce a simple and general neural network to register pieces of 3D point clouds.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021

arXiv / Demo / Project Page

(* indicates corresponding author)

We introduce an urban-scale photogrammetric point cloud dataset and extensively evaluate and analyze state-of-the-art algorithms on it.

IEEE International Conference on Robotics and Automation (ICRA), 2021

arXiv / IEEE Xplore

We introduce a simple end-to-end neural network with self-attention to estimate global poses from FMCW radar scans.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020

arXiv / Semantic3D Benchmark / News: (新智元, AI科技评论, CVer) / Video / Code

(* indicates corresponding author)

We introduce an efficient and lightweight neural architecture to directly infer per-point semantics for large-scale point clouds.

Advances in Neural Information Processing Systems (NeurIPS), 2019 (Spotlight, 200/6743)

arXiv / ScanNet Benchmark / Reddit Discussion / News: (新智元, 图像算法, AI科技评论, 将门创投, CVer, 泡泡机器人) / Video / Code

We propose a simple and efficient neural architecture for accurate 3D instance segmentation on point clouds. It achieved state-of-the-art performance on ScanNet and S3DIS as of June 2019.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019

arXiv / IEEE Xplore

We propose a novel end-to-end deep parallel neural network to estimate 6-DoF poses from consecutive 3D point clouds.

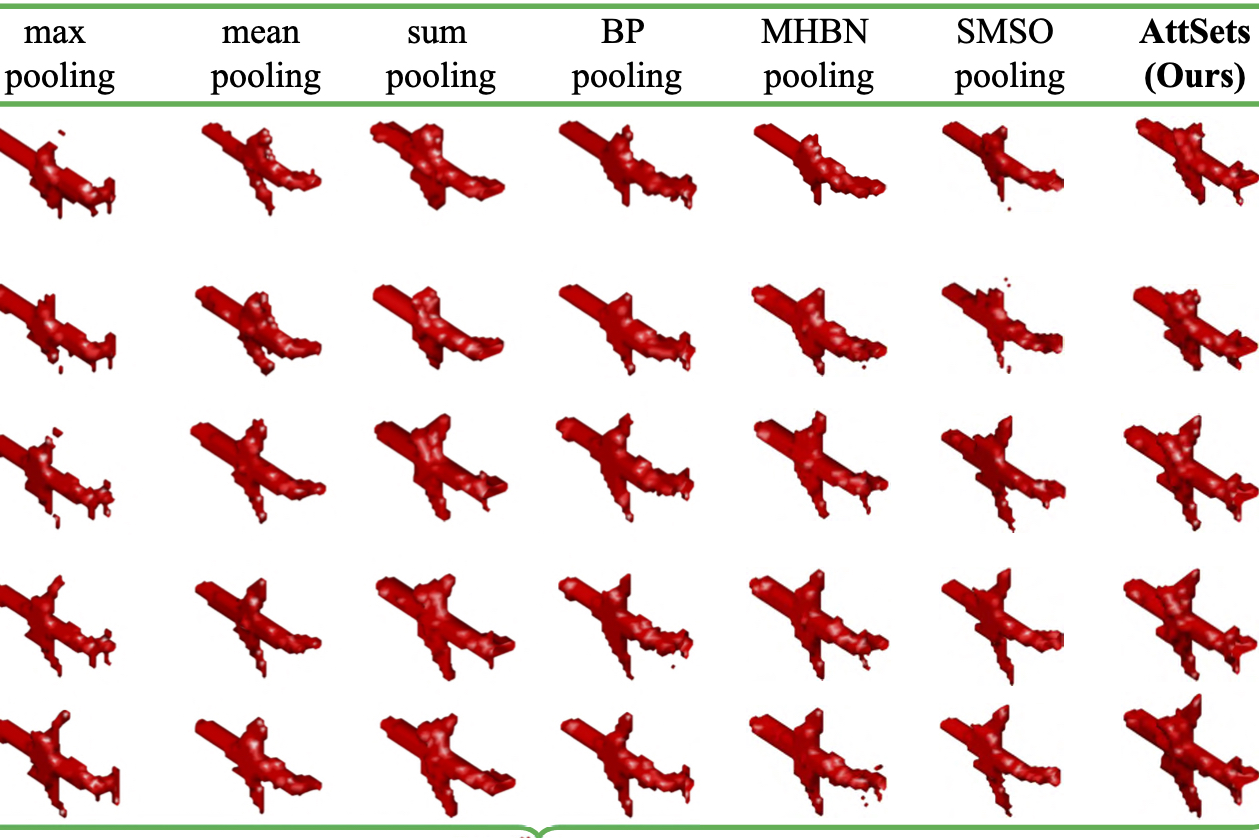

International Journal of Computer Vision (IJCV), 2019 (IF=6.07)

arXiv / Springer Open Access / Code

We propose an attentive aggregation module together with a training algorithm for multi-view 3D object reconstruction.

IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR-W), 2019

CVF Open Access

We propose a simple embedding learning method that jointly optimises for an auto-encoding reconstruction task and for estimating the corresponding attribute labels.

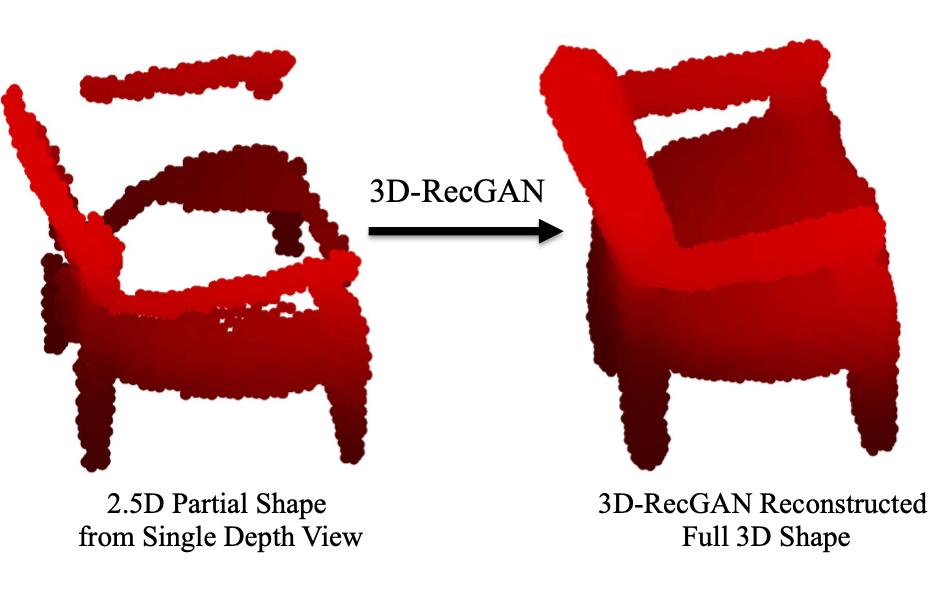

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2018 (IF=17.73)

arXiv / IEEE Xplore / Code

We propose a novel neural architecture to reconstruct the complete 3D structure of a given object from a single arbitrary depth view using generative adversarial networks.

International Joint Conference on Artificial Intelligence (IJCAI), 2018

arXiv / Code

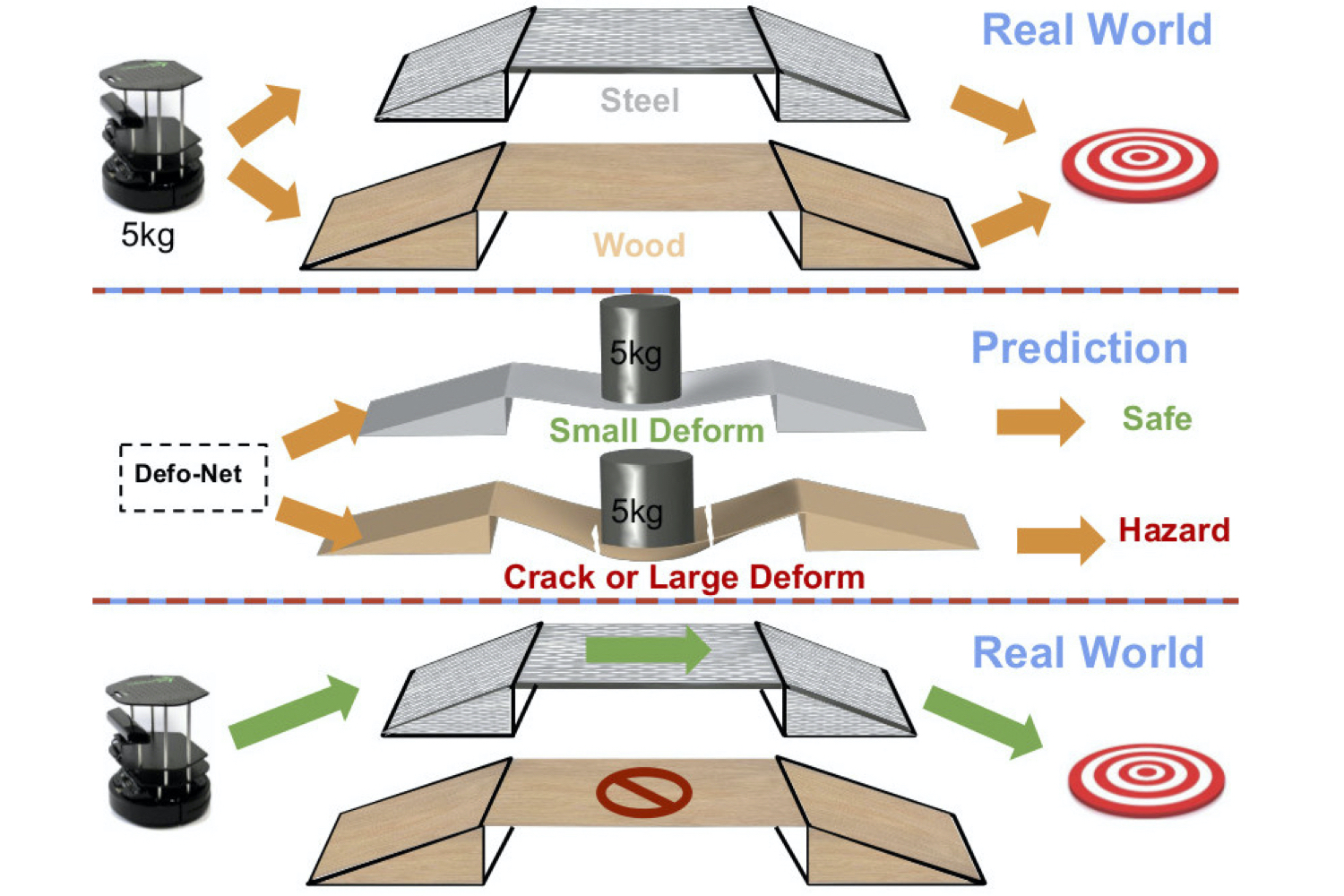

We present a neural framework to predict how a 3D object will deform under an applied force using intuitive physics modelling.

IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR-W), 2018

CVF Open Access / IEEE Xplore

(* indicates equal contribution)

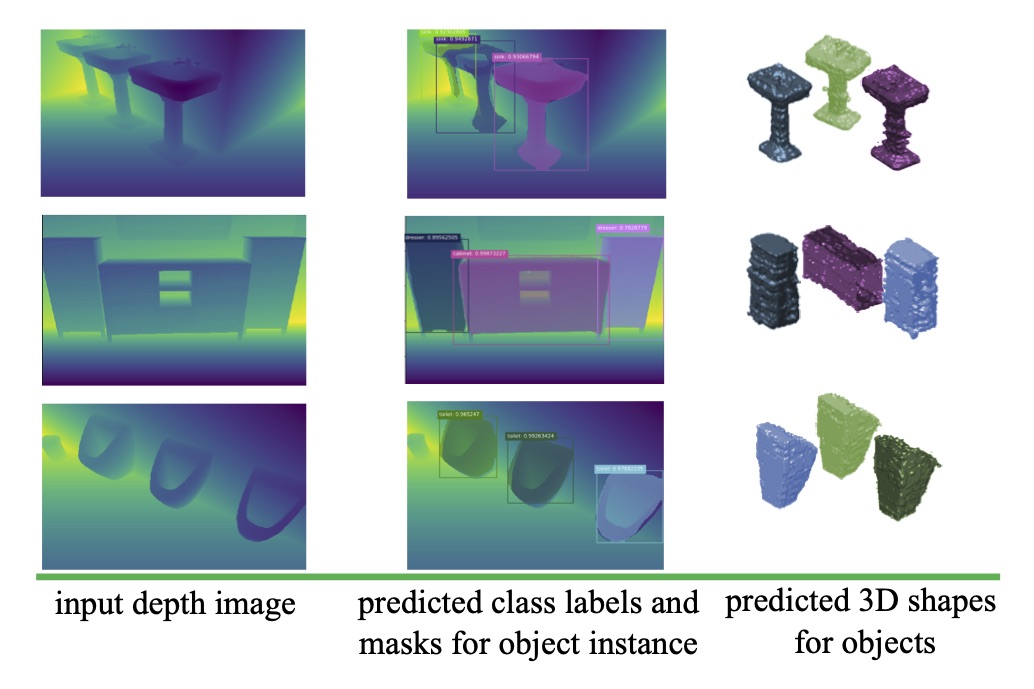

We propose an efficient and holistic pipeline to simultaneously learn the semantics and structure of a scene from a single depth image.

IEEE International Conference on Robotics and Automation (ICRA), 2018

arXiv / IEEE Xplore / Video / Code

We present a novel generative adversarial network to predict body deformations under external forces from a single RGB-D image.

IEEE International Conference on Computer Vision Workshops (ICCV-W), 2017

arXiv / IEEE Xplore / News: 机器之心 / Code

We propose a novel approach to reconstruct the complete 3D structure of a given object from a single arbitrary depth view using generative adversarial networks.